VSAN Cluster Write Latency

This post does not come with a solution, but I wanted to take some time to document what I am seeing.

I am experiencing extremely high write latency. If I can get to the bottom of it, this will probably be a good debugging learning session. In the picture below you can see that write latency has been terrible over the last 24 hours. It is causing instability for every running VM, and I am observing a very similar latency graph for each host in my cluster.

I am running a two-node stretched cluster with a witness. The two nodes are connected point-to-point over a dedicated layer 2 10G network that carries only VSAN traffic. The management network is 1G, and the witness is also connected over that 1G management network using layer 3.

Checking other performance data, nothing stands out for the VMs, hosts, or VSAN. The storage on one of the two hosts is not completely healthy: one device is not showing all of its drives as available. My initial reaction is to blame that; however, given how little data is stored, it seems odd that the remaining resources could not handle the load.

How did the issue present itself?

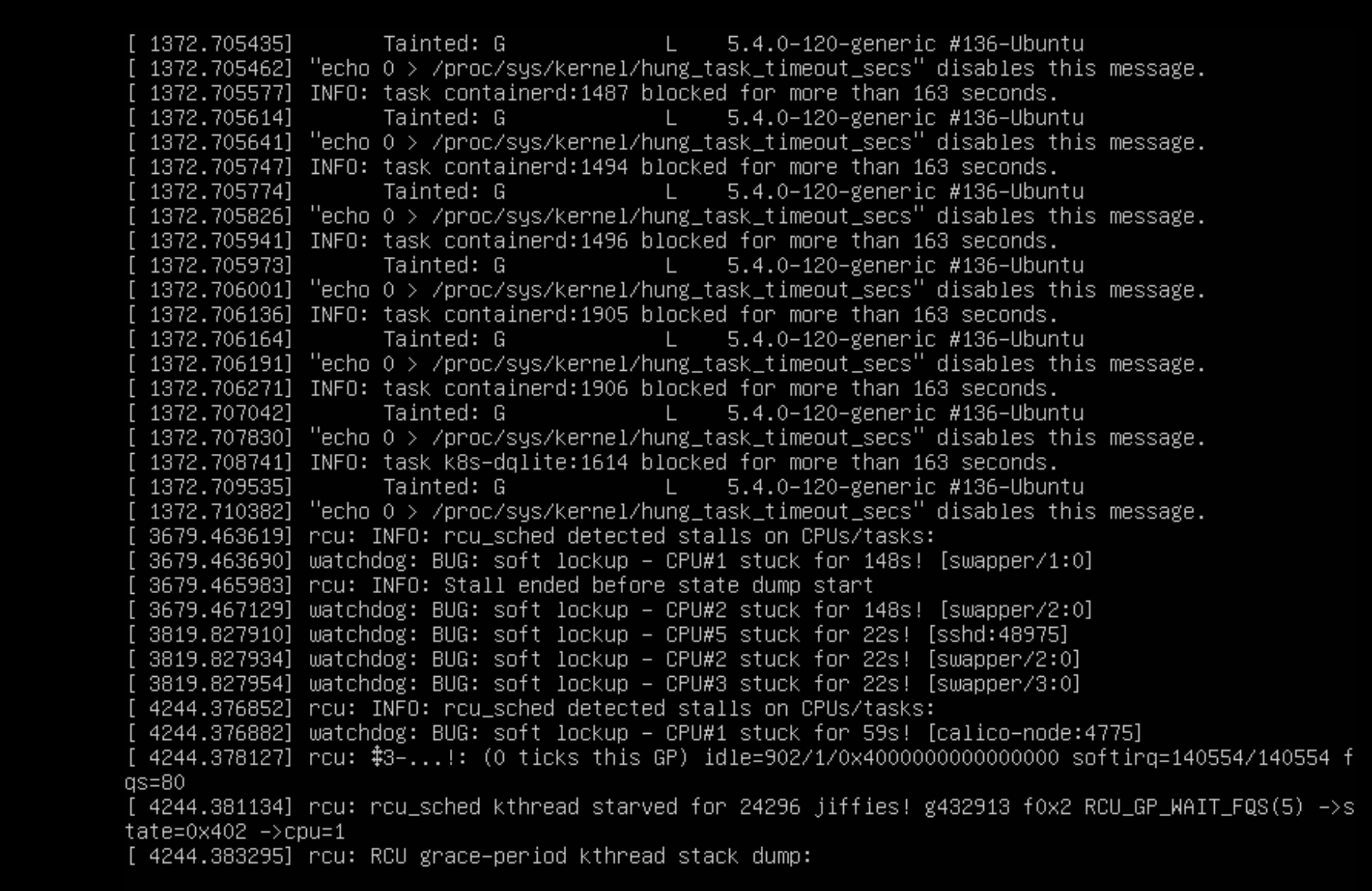

The VMs I was using started locking up. After hanging for a while, they would resume. Below is an example from the console of an Ubuntu server.

The console shows multiple soft lockups of varying length, for example:

WATCHDOG: BUG: soft lockup - CPU#2 stuck for 148s!
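To see how often and how long each vCPU stalled, the console or kernel log can be summarized per CPU. The following is a minimal Python sketch, assuming the guest logs lines in the standard kernel soft-lockup format shown above (on Ubuntu these usually also appear in `dmesg` output or `/var/log/kern.log`). The function names are hypothetical, chosen for illustration.

```python
import re
from collections import defaultdict

# Matches kernel soft-lockup lines such as
# "watchdog: BUG: soft lockup - CPU#2 stuck for 148s! [some-process:1234]".
# Case-insensitive because the prefix may appear as "WATCHDOG" or "watchdog".
SOFT_LOCKUP = re.compile(r"soft lockup - CPU#(\d+) stuck for (\d+)s!", re.IGNORECASE)


def summarize_soft_lockups(lines):
    """Return {cpu: [stall durations in seconds]} for every soft-lockup line."""
    per_cpu = defaultdict(list)
    for line in lines:
        match = SOFT_LOCKUP.search(line)
        if match:
            cpu, seconds = int(match.group(1)), int(match.group(2))
            per_cpu[cpu].append(seconds)
    return dict(per_cpu)


def format_summary(per_cpu):
    """One human-readable line per CPU, sorted by CPU number."""
    return [
        f"CPU#{cpu}: {len(d)} lockup(s), longest {max(d)}s, total {sum(d)}s"
        for cpu, d in sorted(per_cpu.items())
    ]
```

For example, `format_summary(summarize_soft_lockups(open("/var/log/kern.log")))` lists each CPU's lockup count, longest stall, and total stalled time. If every vCPU reports stalls of similar length around the same time, that suggests the whole VM was being held up from outside (for example, waiting on storage) rather than one process misbehaving inside the guest.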

Because this was happening on VMs running on different nodes of the cluster and running different operating systems, I turned my attention to the cluster itself.

My next steps are to:

- Attempt to get the cluster healthy again and the missing drives showing as available

- Investigate other performance metrics

- Investigate any alarms or notifications from ESXi related to this

- Run HCIBench
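When investigating other performance metrics and comparing against HCIBench runs, it helps to reduce each host's write latency samples to a few comparable numbers. Below is a minimal Python sketch, assuming you already have per-host latency samples in milliseconds (for example, values copied from the VSAN performance charts or from HCIBench output). The input format, the host names, and the 20 ms threshold are all hypothetical choices for illustration, not VSAN or HCIBench defaults.

```python
import math
from dataclasses import dataclass


@dataclass
class LatencySummary:
    samples: int
    p50_ms: float
    p95_ms: float
    max_ms: float
    over_threshold: int  # number of samples above the chosen threshold


def percentile(sorted_values, pct):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = math.ceil(pct * len(sorted_values) / 100)
    return sorted_values[max(rank, 1) - 1]


def summarize_latency(samples_ms, threshold_ms=20.0):
    """Summarize one host's write latency samples (milliseconds).

    threshold_ms is a hypothetical cut-off for "bad" samples; tune it
    to whatever latency your workload can tolerate.
    """
    if not samples_ms:
        raise ValueError("no latency samples")
    ordered = sorted(samples_ms)
    return LatencySummary(
        samples=len(ordered),
        p50_ms=percentile(ordered, 50),
        p95_ms=percentile(ordered, 95),
        max_ms=ordered[-1],
        over_threshold=sum(1 for v in ordered if v > threshold_ms),
    )


def summarize_hosts(per_host, threshold_ms=20.0):
    """Apply summarize_latency to each host, e.g. {"host-a": [...], ...}."""
    return {host: summarize_latency(s, threshold_ms) for host, s in per_host.items()}
```

Nearest-rank percentiles are used because they always return an actual observed sample, which keeps the numbers easy to cross-check against the graphs. Since both hosts show a similar latency graph, summaries that look alike for both hosts would be consistent with a shared cause (such as the inter-node network or the witness link) rather than only the host with unhealthy storage.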